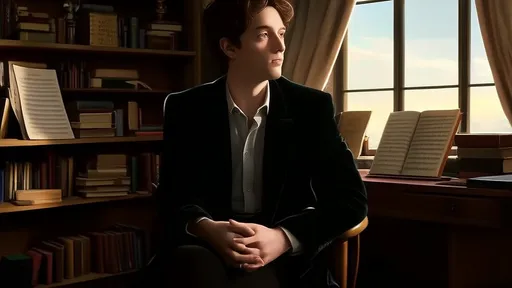

The intersection of music and artificial intelligence has always been fertile ground for innovation, but few explorations are as compelling as the application of Sergei Rachmaninoff’s harmonic language to emotion-driven algorithms. The Russian composer’s lush, chromatic harmonies and sweeping melodic lines have long been celebrated for their emotional depth. That makes them a natural framework for computational models that seek to replicate, or even enhance, human-like emotional expression in music. Researchers and musicians alike are now exploring what might be called the "Rachmaninoff Emotional Harmony Algorithm": a synthesis of his harmonic principles and modern machine-learning techniques.

Rachmaninoff’s music is often described as a bridge between the Romantic era and the modern age, characterized by rich textures and complex emotional undertones. His harmonies, which shift frequently between melancholy and ecstasy, lend themselves remarkably well to algorithmic analysis. By breaking down his chord progressions, voice-leading techniques, and dynamic shifts into quantifiable data, engineers have begun training neural networks to generate music that mirrors his signature style. The goal isn’t mere imitation but an adaptive system that can compose original pieces imbued with the same emotional resonance as Rachmaninoff’s works.
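To make "quantifiable data" concrete, here is a minimal sketch of one possible encoding, assuming chords arrive as lists of note names. Each chord becomes a 12-dimensional pitch-class (chroma) vector, and each chord change gets a voice-leading cost: the smallest total number of semitones the voices must move. The function names and the example progression are hypothetical illustrations, not taken from any published Rachmaninoff model.

```python
from itertools import permutations

# Hypothetical lookup from note names to pitch classes (C = 0 ... B = 11).
NOTE_TO_PC = {"C": 0, "C#": 1, "Db": 1, "D": 2, "D#": 3, "Eb": 3, "E": 4,
              "F": 5, "F#": 6, "Gb": 6, "G": 7, "G#": 8, "Ab": 8, "A": 9,
              "A#": 10, "Bb": 10, "B": 11}


def chroma_vector(chord):
    """12-dimensional binary vector marking which pitch classes sound."""
    vec = [0] * 12
    for note in chord:
        vec[NOTE_TO_PC[note]] = 1
    return vec


def pc_distance(a, b):
    """Shortest distance in semitones between two pitch classes (0 to 6)."""
    d = abs(a - b) % 12
    return min(d, 12 - d)


def voice_leading_cost(chord_a, chord_b):
    """Minimal total semitone motion mapping each voice of chord_a onto chord_b.

    Assumes both chords have the same number of voices and brute-forces
    every voice assignment, which is cheap for triads and seventh chords.
    """
    assert len(chord_a) == len(chord_b), "sketch assumes equal voice counts"
    pcs_a = [NOTE_TO_PC[n] for n in chord_a]
    pcs_b = [NOTE_TO_PC[n] for n in chord_b]
    return min(sum(pc_distance(x, y) for x, y in zip(pcs_a, perm))
               for perm in permutations(pcs_b))


# Illustrative minor-key progression: Cm, Ab, Fm, G.
progression = [["C", "Eb", "G"], ["Ab", "C", "Eb"], ["F", "Ab", "C"], ["G", "B", "D"]]
costs = [voice_leading_cost(a, b) for a, b in zip(progression, progression[1:])]
print(costs)  # [1, 2, 5]
```

Smooth, common-tone-heavy changes score low and more disruptive ones score high. Features like these could feed a learning model alongside dynamics and rhythm. For larger voicings, an assignment algorithm would replace the brute-force permutation search.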

One of the most intriguing aspects of this endeavor is the challenge of encoding subjectivity. Emotion in music is inherently personal, yet Rachmaninoff’s harmonies seem to possess a universality that transcends individual interpretation. To capture this, algorithms are being fed not only sheet music but also recordings, listener feedback, and even physiological data from audiences (heart rate variability, skin conductance) to map how his harmonies evoke specific emotional responses. This multi-modal approach allows the algorithm to learn not just the "what" of Rachmaninoff’s music but the "why" behind its emotional impact.

The implications of such technology extend far beyond academic curiosity. Imagine a film scoring tool that dynamically adjusts its harmonic palette to intensify a scene’s emotional weight, or a therapeutic application that generates calming, Rachmaninoff-inspired melodies tailored to a listener’s mood. Already, early prototypes have demonstrated an uncanny ability to replicate the composer’s stylistic hallmarks: the aching suspensions in "Vocalise," the triumphant climaxes of his piano concertos, the brooding introspection of his preludes. Yet the true test lies in whether these algorithms can move beyond pastiche to produce something genuinely novel while retaining the emotional authenticity of their inspiration.

Critics, of course, abound. Some argue that reducing Rachmaninoff’s genius to data points risks stripping his music of its soul, while others question whether an algorithm can ever truly "understand" emotion the way humans do. Proponents counter that these tools are not replacements for human composers but collaborators, orchestrating new possibilities rather than mechanizing creativity. As the field progresses, one thing is certain: the marriage of Rachmaninoff’s harmonies with AI challenges our very definitions of artistry, emotion, and the boundaries between human and machine expression.

What makes this pursuit particularly timely is its resonance with contemporary debates about AI’s role in creative fields. Unlike general-purpose music generators trained on broad, undifferentiated corpora, the Rachmaninoff algorithm is grounded in a specific, deeply humanistic tradition. It forces us to confront questions about intentionality: can a machine "intend" to evoke sadness or joy, or is it merely simulating those effects? The answers may lie in the listener’s experience. If an algorithm can move an audience to tears with a Rachmaninoff-esque adagio, does it matter whether the emotion was pre-programmed or emergent?

The technical hurdles remain significant. Rachmaninoff’s harmonies often defy textbook analysis, relying on subtle inflections and context-dependent resolutions that are devilishly hard to codify. His use of chromaticism, for instance, isn’t just about adding notes but about creating tension that feels inevitable when resolved. Teaching this to an algorithm requires more than pattern recognition; it demands a form of musical intuition. Some researchers are tackling this by incorporating cognitive science models of how the brain processes expectation and surprise in music, effectively giving the algorithm a crude "sense" of anticipation.
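One established way to give a model a crude sense of anticipation is to score each event by its information content, or surprisal: -log2 of its probability given the preceding context. Statistical models of musical expectation in cognitive science use this quantity. The sketch below is a deliberately simplified stand-in that assumes a first-order (bigram) Markov model over chord symbols with add-alpha smoothing. The toy corpus of Roman-numeral progressions and the function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_bigram(progressions):
    """Count chord-to-chord transitions and collect the chord vocabulary."""
    counts = defaultdict(Counter)
    vocab = set()
    for prog in progressions:
        vocab.update(prog)
        for prev, nxt in zip(prog, prog[1:]):
            counts[prev][nxt] += 1
    return counts, vocab


def surprisal(counts, vocab, prev, nxt, alpha=1.0):
    """Information content in bits, -log2 P(nxt | prev), with add-alpha smoothing.

    Higher values mean a less expected continuation, i.e. more "tension".
    """
    row = counts[prev]
    total = sum(row.values())
    p = (row[nxt] + alpha) / (total + alpha * len(vocab))
    return -math.log2(p)


# Hypothetical toy corpus of minor-key progressions in Roman numerals.
corpus = [["i", "iv", "V", "i"], ["i", "iv", "V", "i"], ["i", "VI", "V", "i"]]
counts, vocab = train_bigram(corpus)
print(round(surprisal(counts, vocab, "V", "i"), 3))   # 0.807
print(round(surprisal(counts, vocab, "V", "iv"), 3))  # 2.807
```

The familiar resolution V to i scores about 0.81 bits, while the never-heard V to iv scores about 2.81 bits. A practical system would condition on longer contexts and on voicing, not just chord labels, but the same idea applies: low-probability continuations register as surprise.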

Ethical considerations also loom large. Rachmaninoff’s estate, like those of many late composers, guards his legacy carefully. Should an algorithm-generated piece in his style be credited to him, to the programmers, or to some hybrid entity? And who owns the copyright to such creations? These questions echo broader concerns about AI and intellectual property but gain added poignancy when applied to a composer whose works are so deeply tied to his personal struggles and triumphs.

Despite these challenges, the Rachmaninoff Emotional Harmony Algorithm represents a fascinating frontier in both music and technology. It’s a testament to the enduring power of his compositions that they can inspire not just performances and interpretations but entire computational paradigms. As the project evolves, it may well redefine how we think about the relationship between emotion and structure in music—proving that even in the age of AI, the human heart remains the ultimate composer.

By /Jul 17, 2025